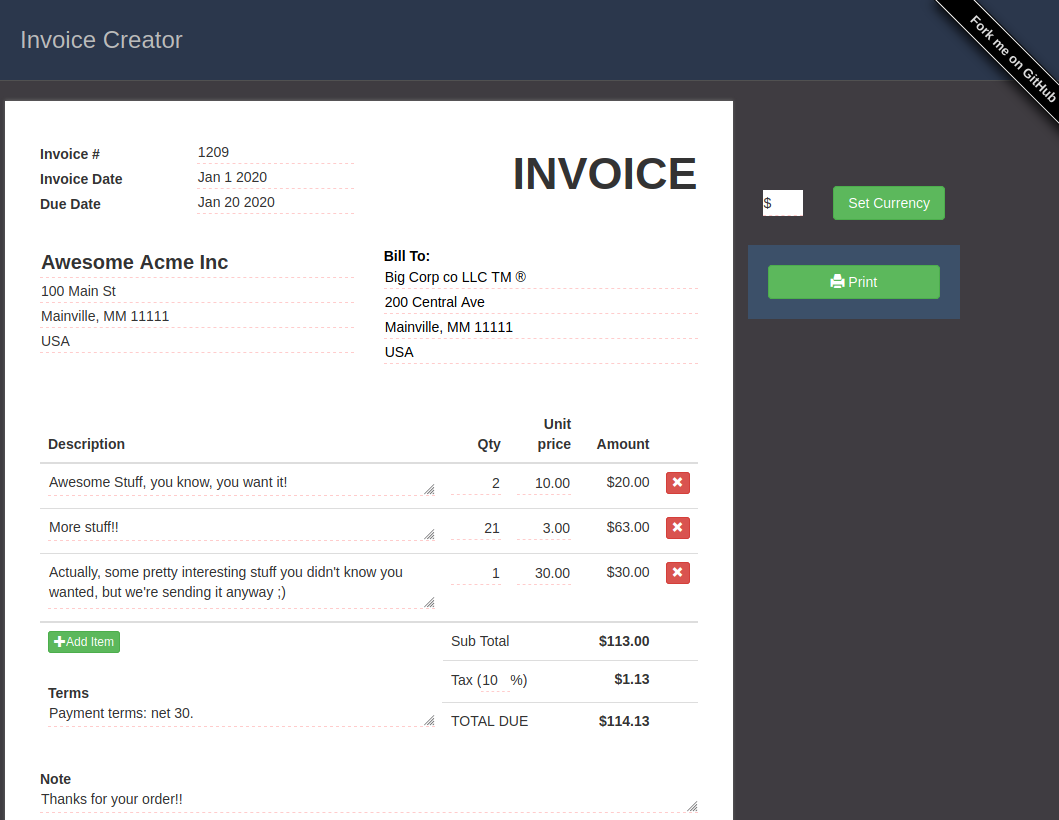

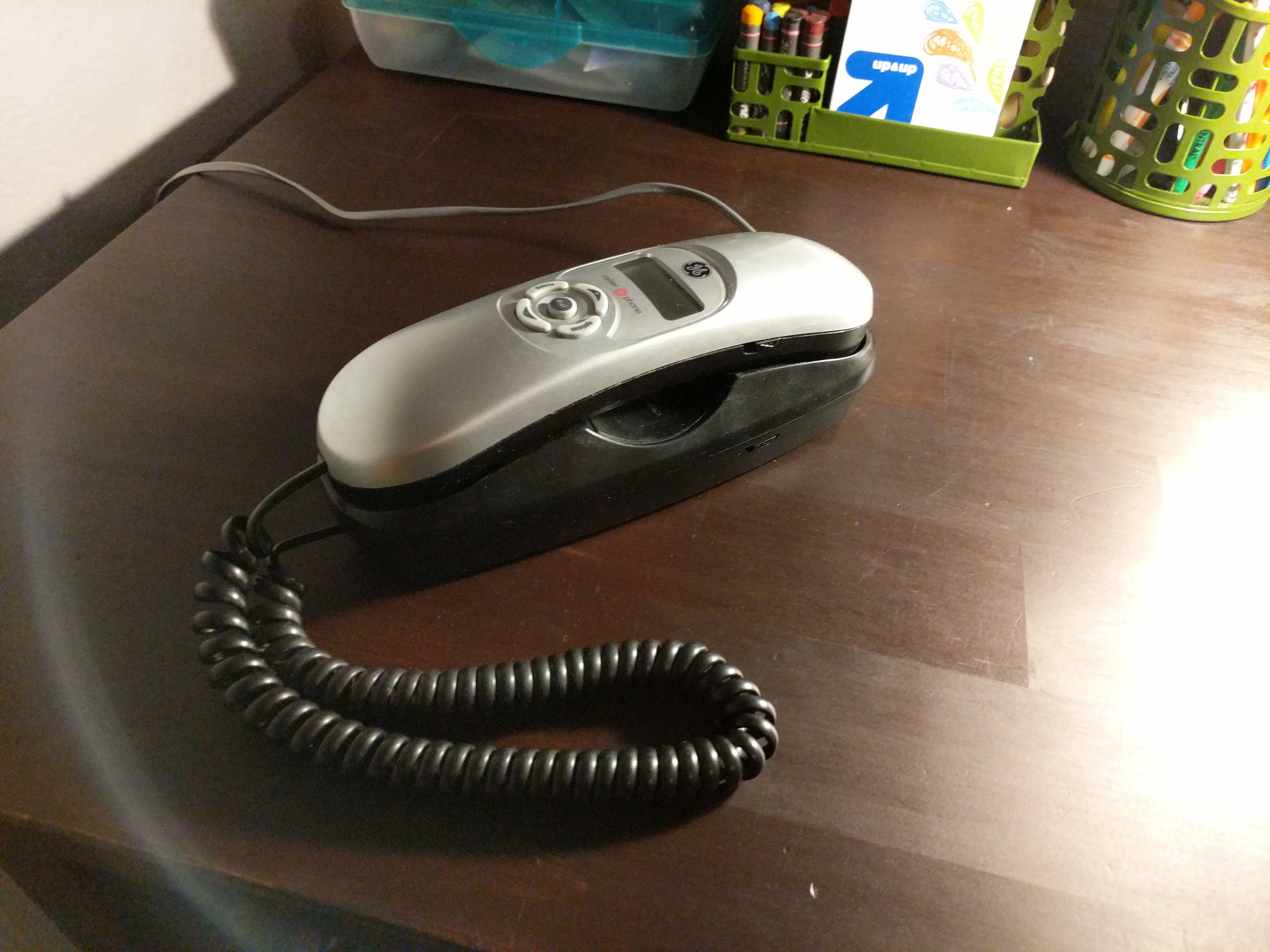

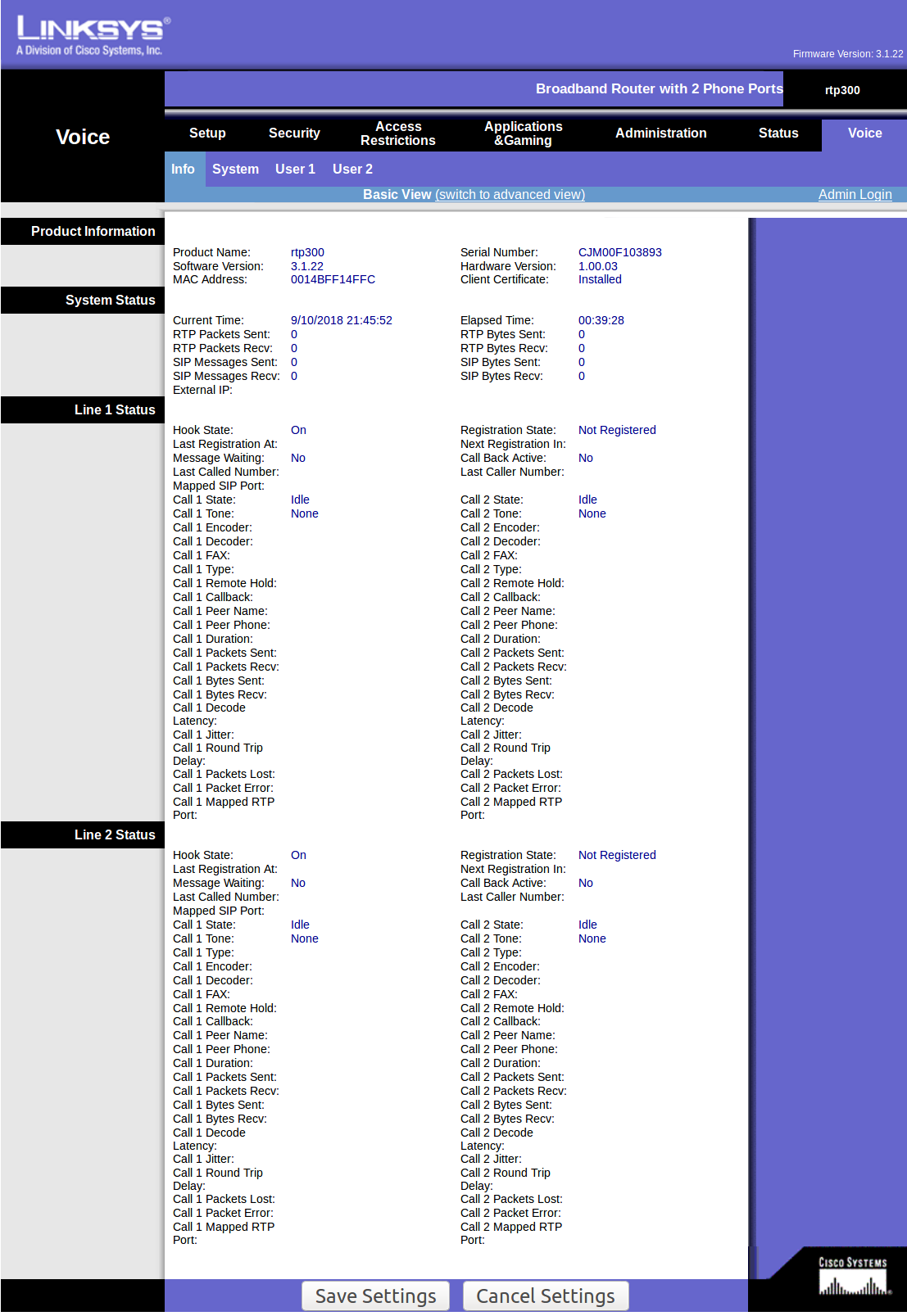

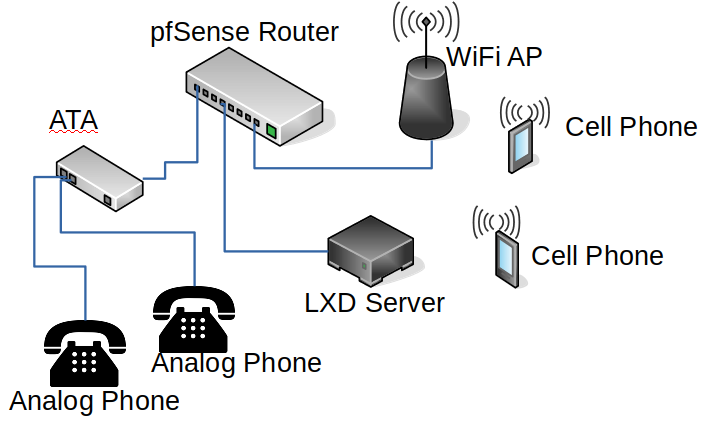

You may remember that a while ago, I set up a fun little PBX for my kids. It was awesome! That setup allowed my partner and me to use our cell phones as SIP clients to the Asterisk instance running on the LXD server, and my kids each had an analog phone going through the ATA:

Since then, I decided it would actually be pretty cool to have a phone in our kitchen so we could call upstairs to the kids. If I was gonna wire up 1 phone, I may as well wire up 3 phones and I may as well make them all awesome. Yes, you know it, I’m talking about deploying 3 of the venerable Cisco 7960s:

These phones, according to my research, will be 20 years old in August of next year. That’s 10 years older than my oldest kid. That’s... really old! Especially in internet time! Yet, these phones are indeed venerable. They simply work and won’t quit. Even when they do quit, all you need is a little cardboard and they’ll keep on goin’. I had a few lying around and they’re often posted for sale for $5-15 online. I won’t get into it in this post, but it is somewhat of an art to get them on the right SIP (not SCCP!) firmware. This guide has some good info, as does Loligo’s. tl;dr: set up a TFTP server, set your DHCP with the TFTP option, tie your phone’s MAC to the right conf file, and away you go. Feel free to email me if you get stuck!
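To make the "tie your phone’s MAC to the right conf file" step a bit more concrete, here is a minimal Python sketch. It assumes the naming convention I believe the 7960 SIP firmware uses, where each phone asks the TFTP server for `SIP<MAC>.cnf` with the MAC in uppercase and no separators; check that against the guides above before relying on it. The function name and the sample MAC are made up for illustration.

```python
import re


def cisco_sip_config_name(mac: str) -> str:
    """Build the per-phone config filename from a MAC address.

    Assumption: the phone requests SIP<MAC>.cnf from the TFTP server,
    with the MAC written as 12 uppercase hex digits and no separators.
    """
    # Drop separators such as ':', '-' or '.' and keep only the hex digits.
    hex_digits = re.sub(r"[^0-9A-Fa-f]", "", mac)
    if len(hex_digits) != 12:
        raise ValueError(f"expected 12 hex digits in MAC, got {mac!r}")
    return f"SIP{hex_digits.upper()}.cnf"


# Hypothetical MAC address, not one of my phones.
print(cisco_sip_config_name("00:0e:38:aa:bb:cc"))  # SIP000E38AABBCC.cnf
```

Drop a file with that name in the TFTP root and the phone with that MAC should pick it up on boot, assuming the DHCP TFTP option (commonly option 66, or Cisco’s option 150) points at your server.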

But we’re getting ahead of ourselves. Before we could plug the phones in, we had to string some Ethernet. This means that my kids learned the important life skill every 7 year old needs to know: how to crimp RJ45 cable ends:

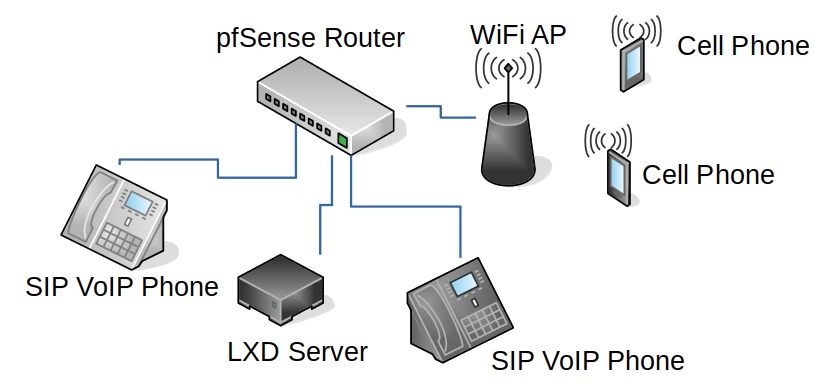

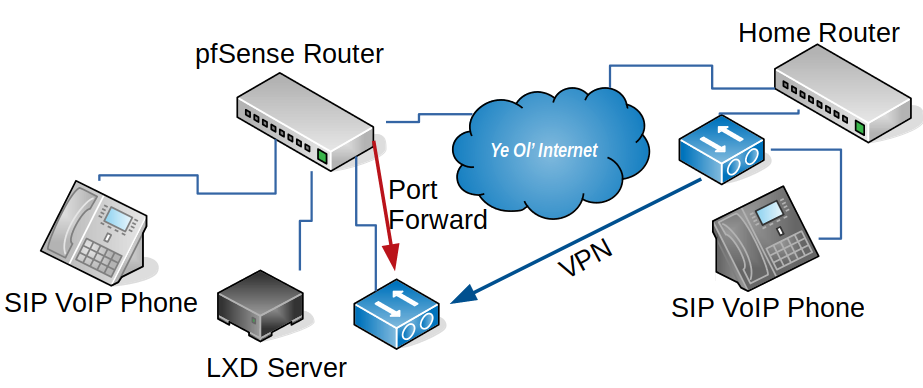

After all 3 phones were physically connected to the network (and running SIP firmware per above), they could connect to the Asterisk instance on the LXD box. Now our setup looks like this (only two SIP phones are shown, but we have 3. Actually, I put one on my office desk recently, so now we have 4 :) ):

At this point, I nuked the vanilla Asterisk instance and installed the latest version of FreePBX. Now the kids no longer get to learn about busy signals; instead they get to learn about conference calls, hold music (but not THAT hold music, sadly), voicemail and a house-wide paging system. It is SO much fun! And, honestly, it’s super practical too.

I was talking to my sister recently and she’d heard the kids talk about their phones and how much they loved them. I asked if she wanted one at her house. Given our kids don’t have email or a cell phone, this would give my sister a direct way to contact her niece and nephew with no middle parent man. Let’s do it! But…how?

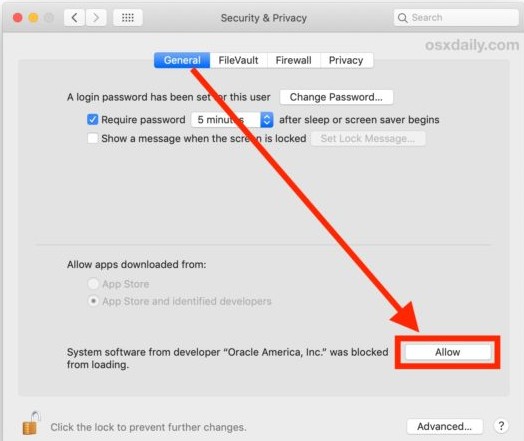

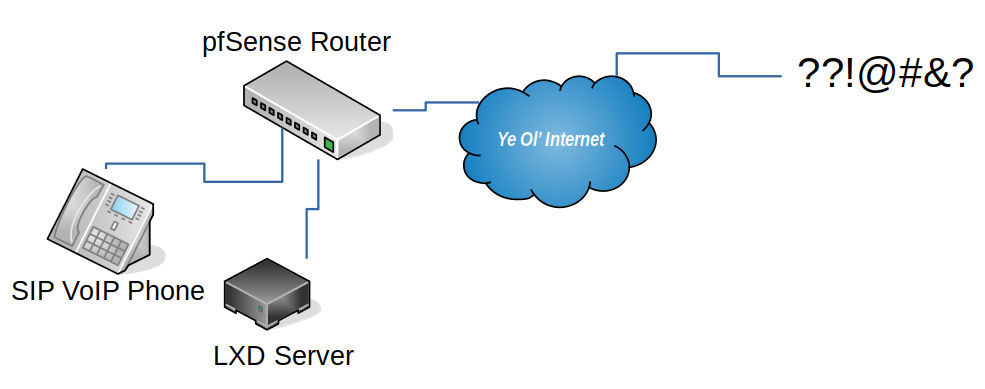

Let’s assume we just go for it. We’ll just program another phone we picked up off Craigslist to talk to the public IP of my house (no static IP, but that’s what Dynamic DNS is for), and we’ll punch a hole in the NAT Firewall Router thingy (a fanless doodad running pfSense). Asterisk uses SIP as we know, which is on UDP port 5060, so it’s pretty easy. We do a port forward like this (see red arrow):

This is a bad idea. On so many levels. First off, these hella old phones use only unencrypted tech. I mean, why use SSH when you have telnet? Why use TLS when you have good ol’ HTTP? SIP itself is unencrypted which means that any one of the many hops the traffic goes through will be able to trivially sniff the UDP packets used to authenticate against the Asterisk instance. Not only could they get on to my LAN, they could listen to all the calls. Nitpickers may note that Wikipedia speaks of SIP encryption – but that’s impossible on these old phones.
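To show how little work "sniffing" takes, here is a small Python sketch that parses a raw SIP message the way any hop on the path could. The sample REGISTER is made up and trimmed (a real one has more headers such as Via, Call-ID and CSeq), and every name, host and hash in it is hypothetical. Note that with digest authentication the password itself is not sent in the clear, but the username, realm, nonce and response hash are all right there in plain text.

```python
def parse_sip_headers(raw: bytes) -> tuple[str, dict[str, str]]:
    """Split a plaintext SIP message into its start line and headers."""
    text = raw.decode("utf-8", errors="replace")
    # Headers end at the first blank line; anything after it is the body.
    head, _, _body = text.partition("\r\n\r\n")
    lines = head.split("\r\n")
    start_line = lines[0]
    headers: dict[str, str] = {}
    for line in lines[1:]:
        name, sep, value = line.partition(":")
        if sep:
            headers[name.strip().lower()] = value.strip()
    return start_line, headers


# Hypothetical, trimmed REGISTER as it would appear on the wire.
SAMPLE = (
    b"REGISTER sip:pbx.example.org SIP/2.0\r\n"
    b"From: <sip:kitchen@pbx.example.org>\r\n"
    b"To: <sip:kitchen@pbx.example.org>\r\n"
    b'Authorization: Digest username="kitchen", realm="asterisk", '
    b'nonce="abc123", uri="sip:pbx.example.org", '
    b'response="0123456789abcdef0123456789abcdef"\r\n'
    b"Content-Length: 0\r\n"
    b"\r\n"
)

start_line, headers = parse_sip_headers(SAMPLE)
print(start_line)
print(headers["authorization"])
```

No keys, no decryption, just string splitting. That is the whole problem with forwarding port 5060 straight to the internet.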

These types of hacks are not theoretical either. Security researcher Ang Cui has made quite a name for himself with all the vulns he’s found in these phones. In a Defcon 21 talk called “Stepping P3wns: Adventures in full spectrum embedded exploitation (and defense!)” he demonstrated how sending a resume (PDF) which would get printed on a (vulnerable) HP printer would allow a reverse tunnel to open up, which could then be used to hack the phone on the desk and silently enable the mic so he could listen to you discuss his “resume”. Awesome!! And scary ;) The same nitpicker as above will note this was the 7961, not the 7960. Still, my OLDER phone is very likely less secure than the NEWER one.

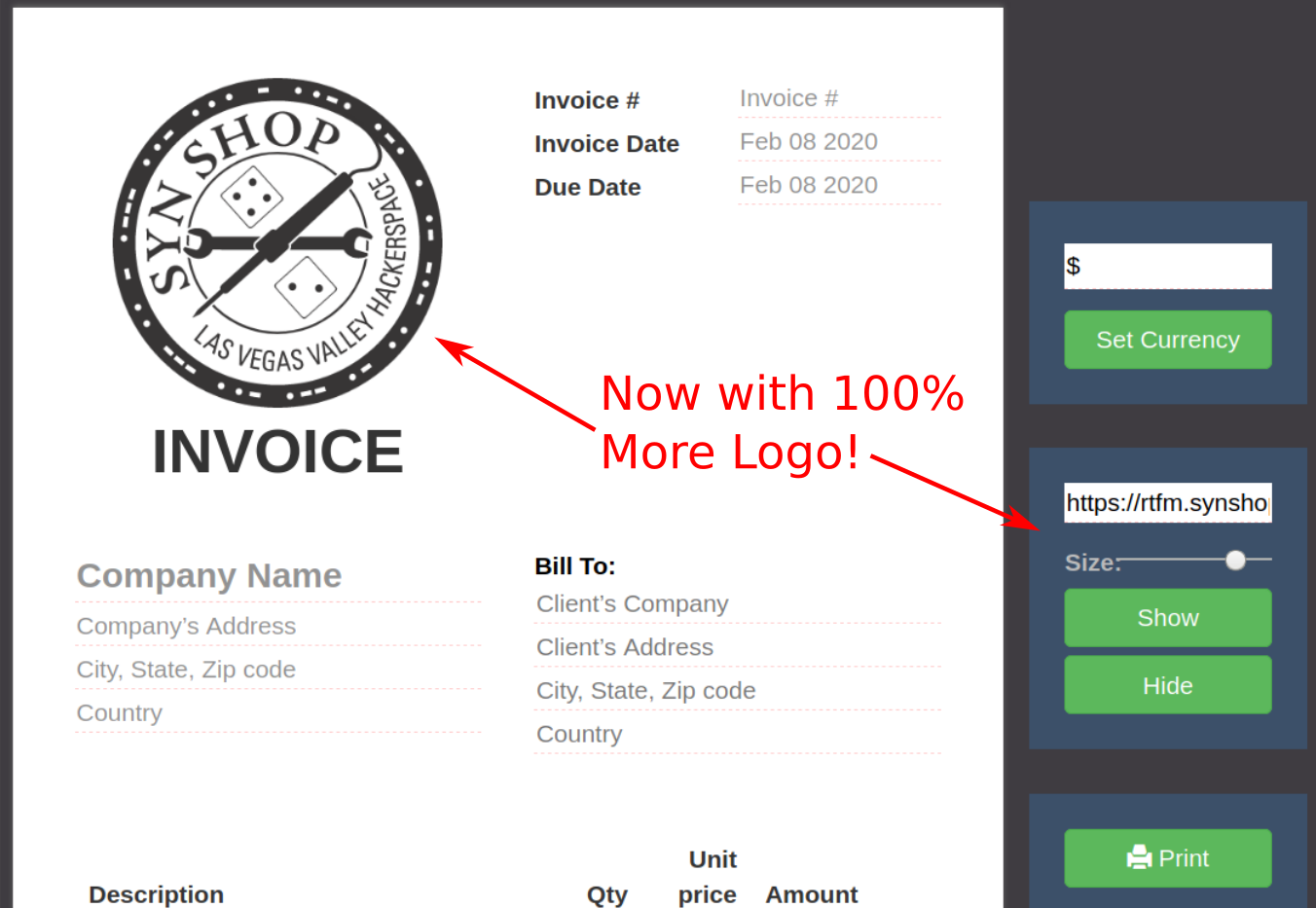

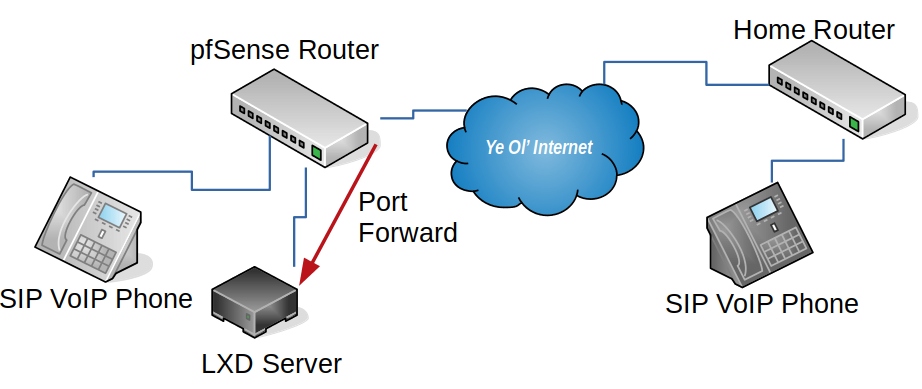

Maybe I should encrypt the traffic? Like, what if we put a VPN server behind the firewall, do a port forward to it, and a VPN client at the remote “office”? That way the SIP traffic is never seen on the internet! Yeah!! Very similar to the diagram above, but with two more devices:

Now instead of unencrypted packets being forwarded to the Asterisk server, we only have encrypted packets being forwarded to the VPN server (again, see red arrow below). Further, the remote phone uses the VPN (blue arrow) and thinks it’s on my home network, un-routable IP and all!
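If you want to sanity check the "thinks it’s on my home network" part, Python’s standard `ipaddress` module can tell you whether an address is private and whether it falls inside your LAN. This is only a sketch: the `192.168.1.0/24` subnet and the sample addresses are assumptions for illustration, not my actual network.

```python
import ipaddress

# Hypothetical home LAN subnet; substitute your own.
HOME_LAN = ipaddress.ip_network("192.168.1.0/24")


def classify(addr: str) -> tuple[bool, bool]:
    """Return (is_private, is_on_home_lan) for an IP address string."""
    ip = ipaddress.ip_address(addr)
    return ip.is_private, ip in HOME_LAN


# A remote phone that came in over the VPN with a LAN address.
print(classify("192.168.1.50"))
# A public address, for comparison.
print(classify("8.8.8.8"))
```

From the PBX’s point of view, a remote phone with an address in the home subnet is indistinguishable from one plugged in upstairs.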

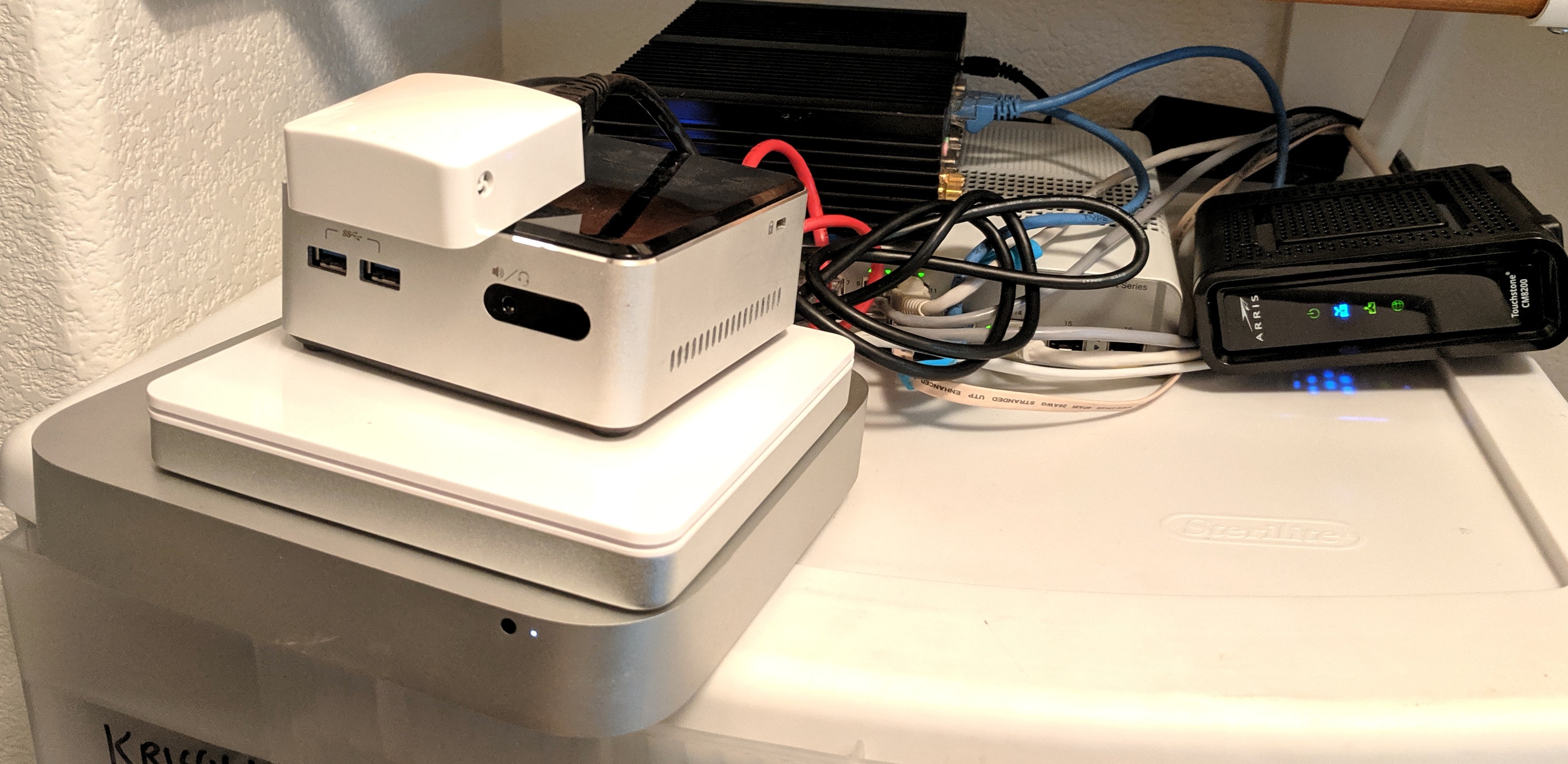

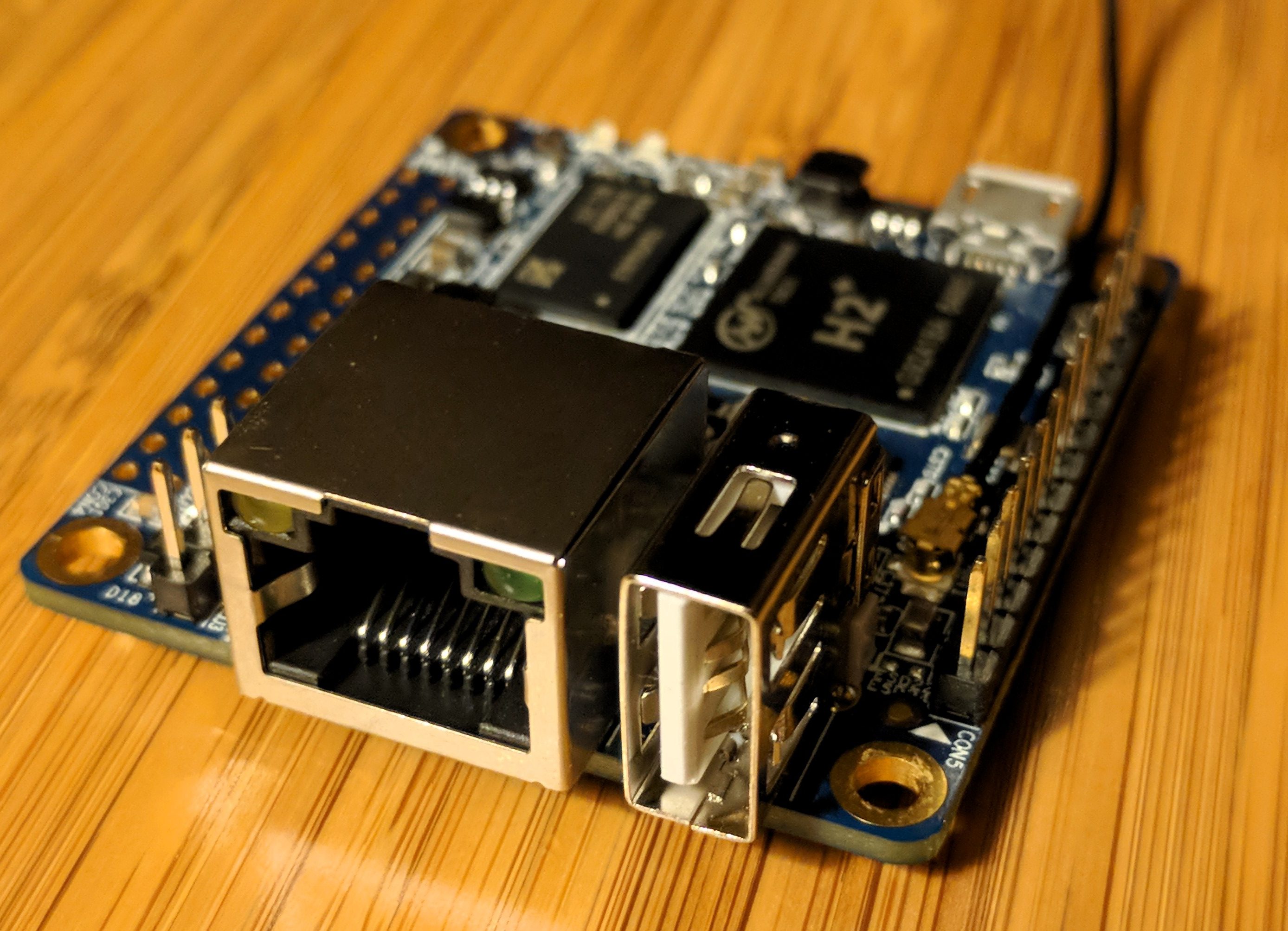

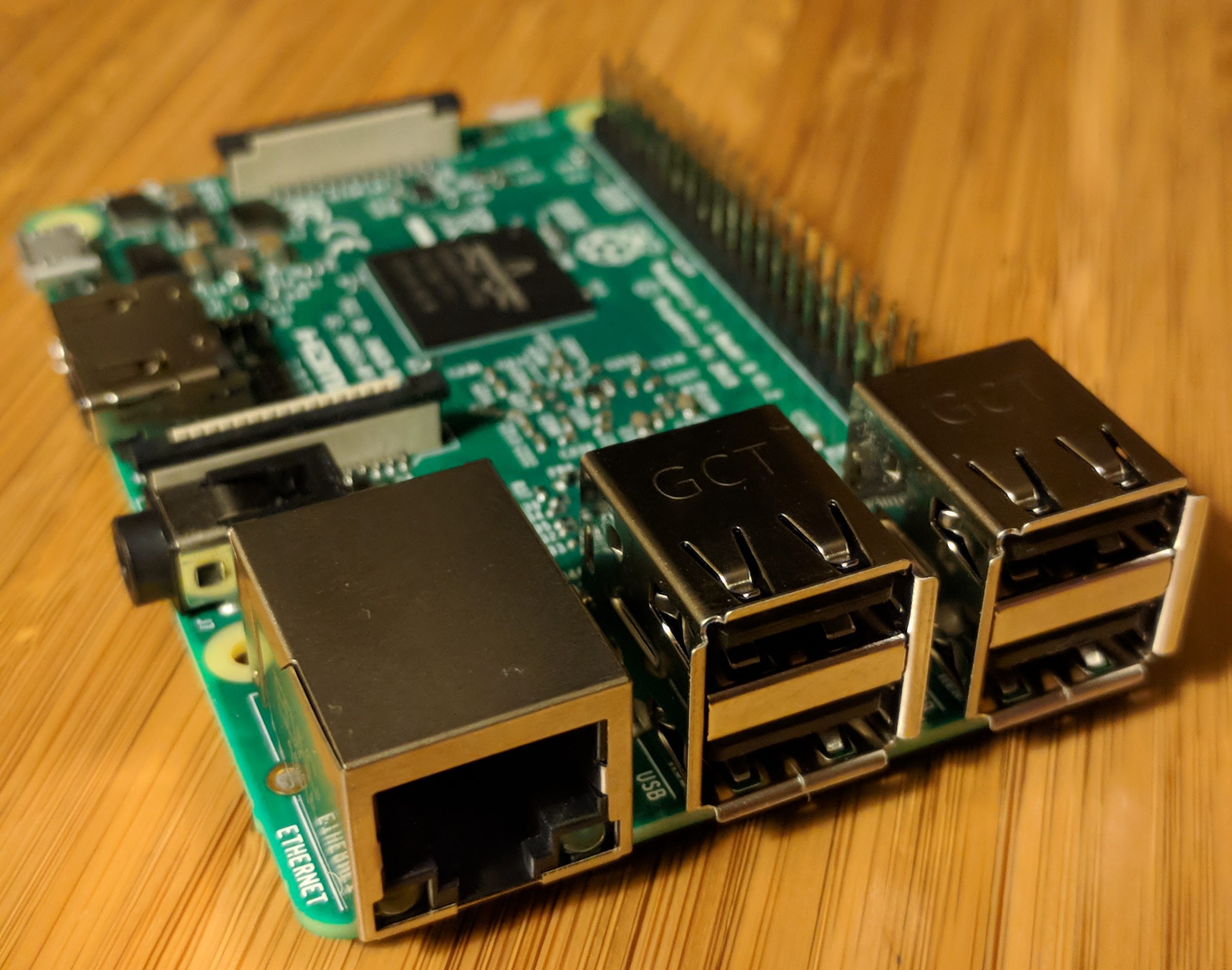

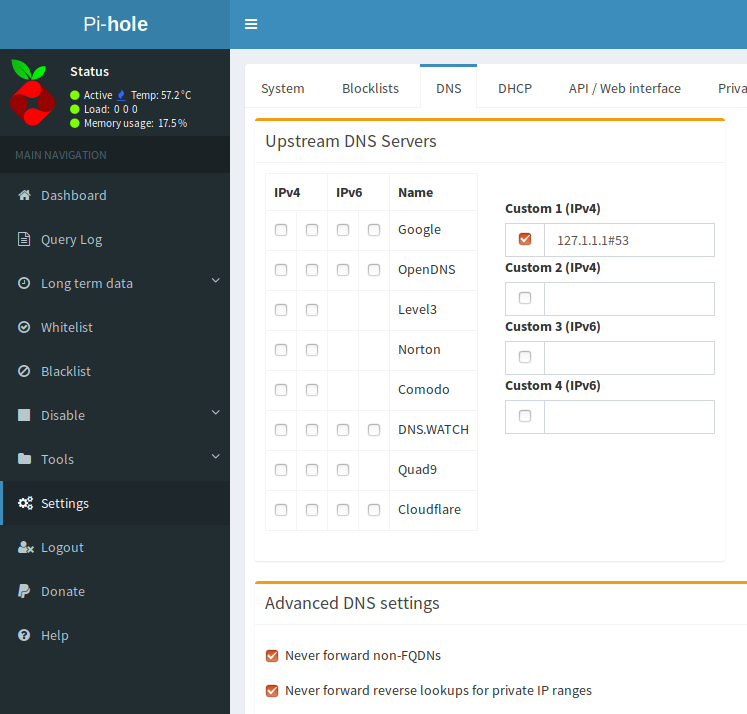

But whereas we spent $15 before and reused existing phones, the new setup needs a VPN, and VPNs sound hard and possibly expensive to deploy. Maybe it can’t be done the cheap-cheap? Dun dun dun!! Enter WireGuard! This insanely simple, radically secure and Sys Admin friendly VPN is great. I’ve deployed a bunch of instances now and can’t get enough of it. But what about the price of the hardware? Here’s where the final piece of this Asterisk, LXD, WireGuard VPN and Remote “Office” puzzle is put in place:

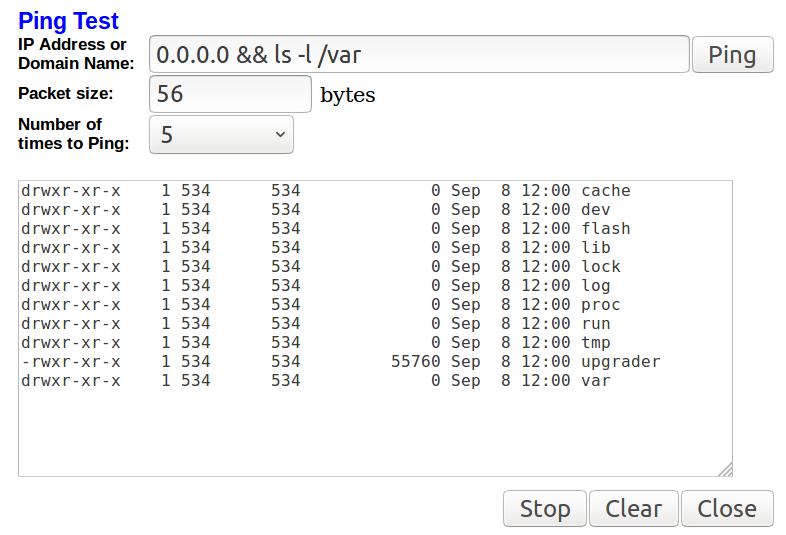

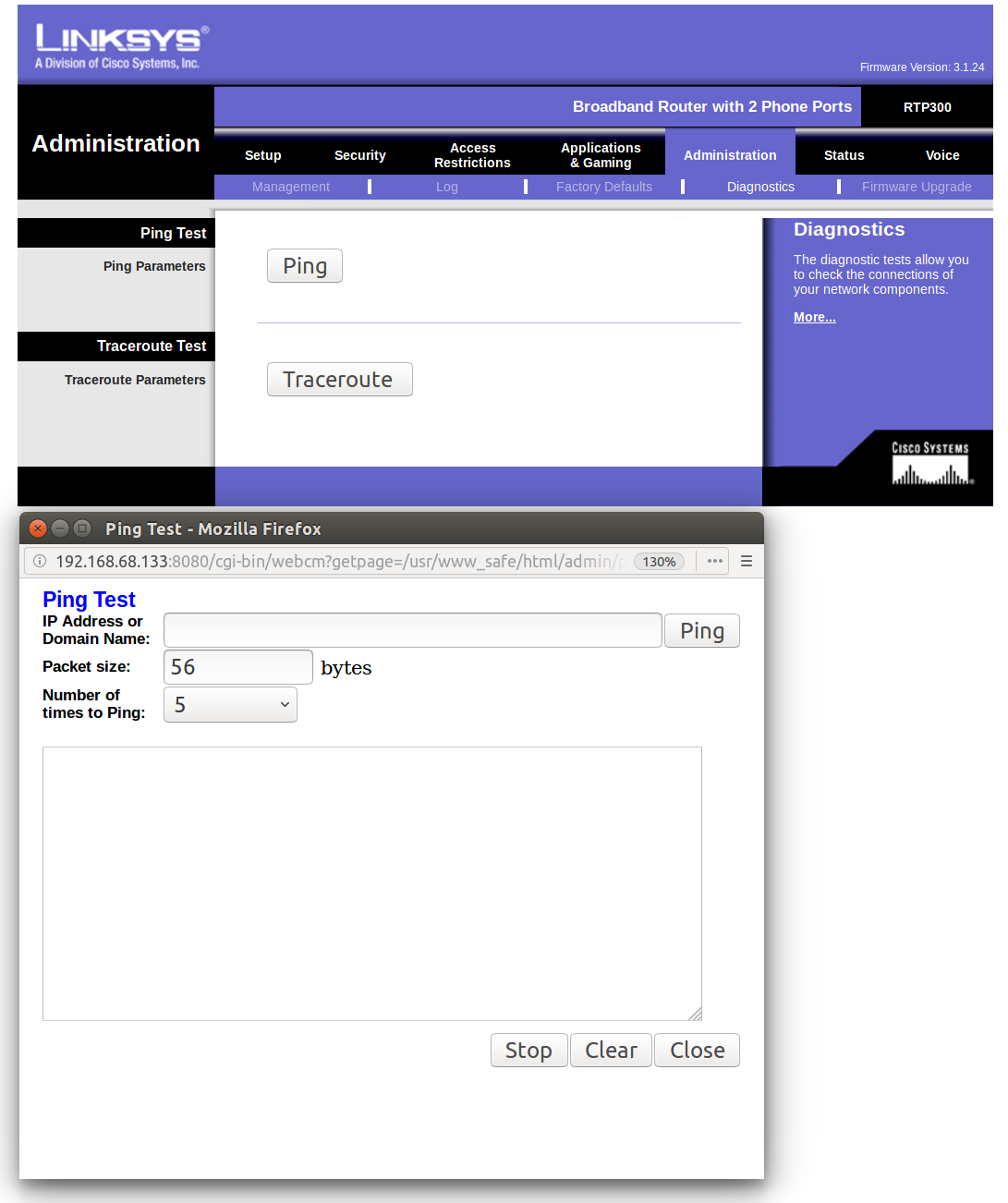

For just over $20 shipped you too can have an awesome VPN server, aka the GL-MT300N-V2 made by GL Technologies (aka GL.iNet). They work as clients too! While we’ve had to reboot the remote VPN and phone once or twice, we’ve had months of uptime using this setup. The router supports a slick GUI (what I ended up using) but if you’re retro, you can do it all manually too.
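For the retro crowd, here is roughly what the manual route boils down to on the client side: a small `wg-quick` style config file. The Python sketch below just renders one from a few values. I used the GUI, so this is not my actual config; the keys, addresses and hostname are placeholders, and port 51820 is only the commonly used WireGuard port, not something the router mandates.

```python
def render_wg_client_config(
    private_key: str,
    address: str,
    server_public_key: str,
    endpoint: str,
    allowed_ips: str,
    keepalive: int = 25,
) -> str:
    """Render a wg-quick style client config as text."""
    lines = [
        "[Interface]",
        f"PrivateKey = {private_key}",
        f"Address = {address}",
        "",
        "[Peer]",
        f"PublicKey = {server_public_key}",
        f"Endpoint = {endpoint}",
        f"AllowedIPs = {allowed_ips}",
        # Keepalives help hold the NAT mapping open from the remote side.
        f"PersistentKeepalive = {keepalive}",
    ]
    return "\n".join(lines) + "\n"


# All values below are placeholders for illustration only.
print(
    render_wg_client_config(
        private_key="<client-private-key>",
        address="10.0.0.2/32",
        server_public_key="<server-public-key>",
        endpoint="home.example.org:51820",
        allowed_ips="192.168.1.0/24",
    )
)
```

Setting `AllowedIPs` to just the home subnet means only PBX-bound traffic goes through the tunnel, which is all the remote phone needs.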

An added bonus to this whole setup is that by adding a WireGuard client on my phone, I can now VPN in and use the SIP client wherever I am to call or be called.

Postscript: A few weeks ago we decided we’d experiment with letting the kids be at home alone for short periods. Per above, they have no cell phones and we have no landline. But with a perfectly good PBX in place already, I spent $4 getting a LocalPhone SIP trunk. We now pay $0.005 per outgoing call. Yes, you read that right, half a cent per call. Read more over at Ward Mundy’s site!